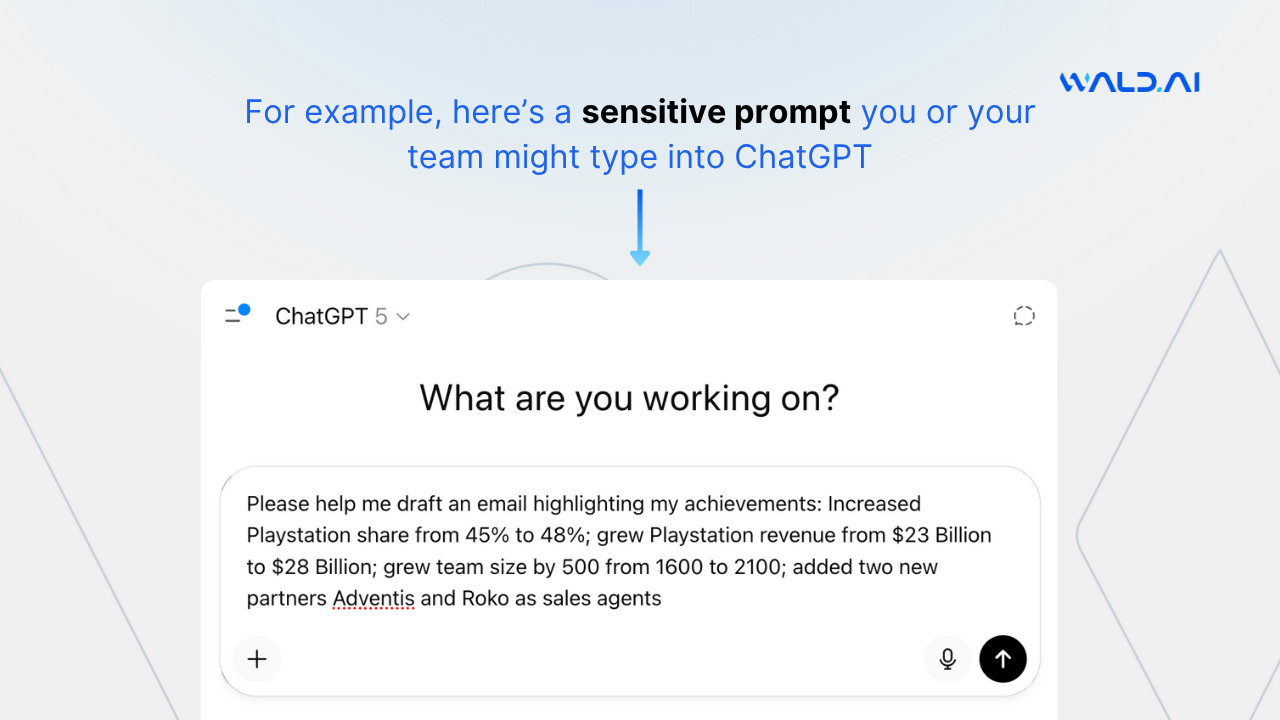

Everyone’s done it. You open ChatGPT, drop in a client report, maybe a few lines of code, and ask for help. It feels private. It’s not.

Once you hit Enter, that data doesn’t stay inside your network. It travels to OpenAI’s servers, where it’s processed, stored, and sometimes reviewed. You lose visibility the second it leaves your screen.

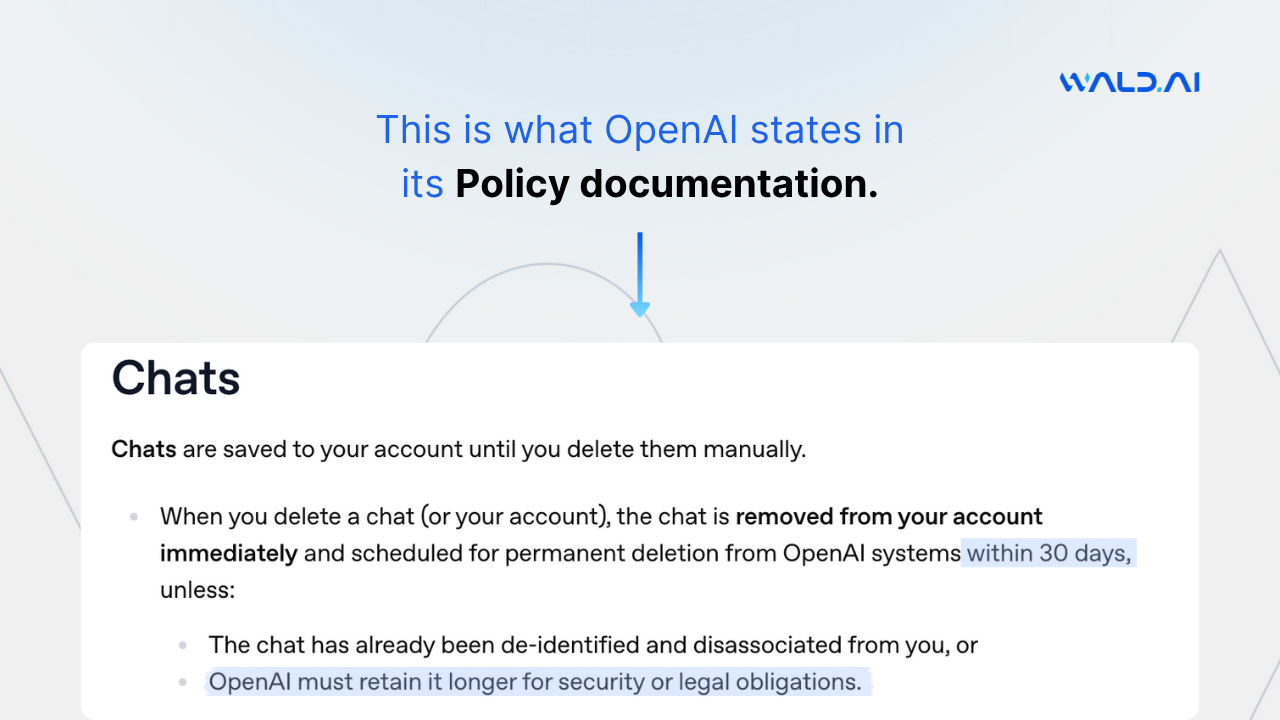

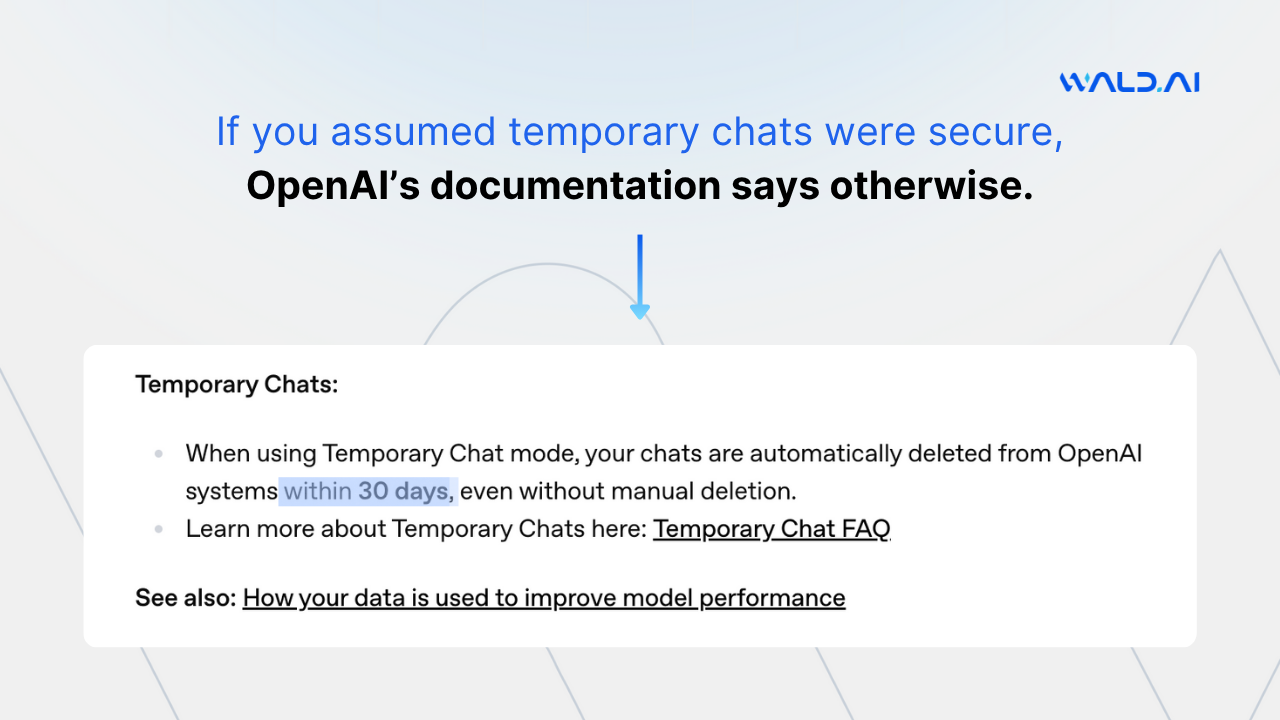

By policy, OpenAI can keep your prompts and responses for up to 30 days to detect abuse and “improve quality.” That means every request, every answer, and every file snippet can sit on their systems for a month, or longer if it's flagged for review.

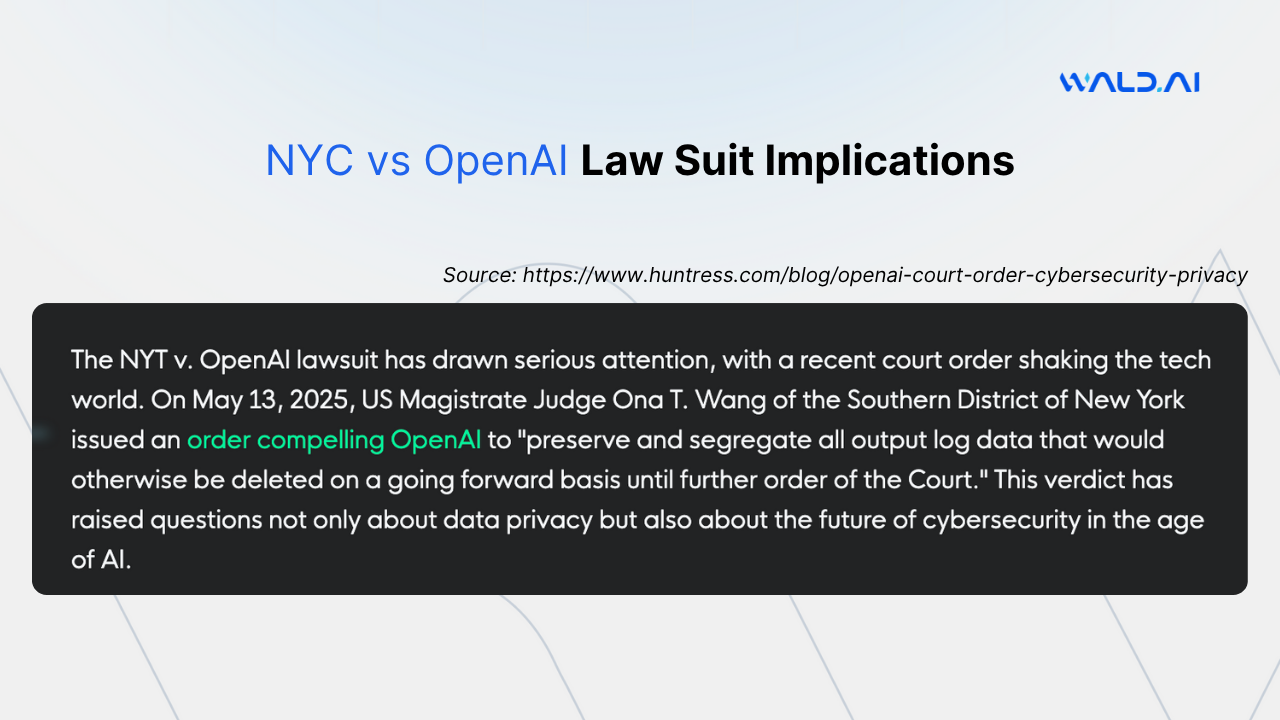

In fact, The New York Times' lawsuit against OpenAI led to a court order requiring the company to preserve user data it would otherwise delete. So even if you delete your chat, a copy may still exist somewhere, held in legal limbo.

That’s not a hypothetical risk. That’s a live copy of your internal data sitting outside your control for a month.

Here’s the uncomfortable truth. Every system that stores sensitive data for “just 30 days” is a breach target. It’s not a question of if but when.

Attackers don’t need to break into your network anymore. They just need to wait for your employees to send the data to someone else’s servers.

And because you can’t track what’s leaving or where it lands, you won’t even know what leaked — until it’s too late.

We’ve compiled a list of ChatGPT vulnerabilities here.

SOC 2, HIPAA, GDPR — all of them hinge on one thing: control. You need to know what data leaves your environment, where it’s stored, and how it’s used.

With ChatGPT and other public AI tools, you can’t guarantee any of that. You rely entirely on policy-level protection, not technical enforcement. The “we promise not to train on your data” clause sounds nice, but there’s no way to audit it.

For CISOs, that’s a nightmare. For regulators, it’s an open invitation.

When faced with this mess, most security teams do the obvious thing: block access.

But that doesn’t solve it. Employees still use AI — just on their personal laptops or phones.

That’s shadow AI, and it’s spreading fast. Every blocked tool drives more unmonitored usage. The very thing the ban was supposed to prevent becomes invisible and uncontrollable.

That’s where Wald steps in.

Wald lets your teams keep using ChatGPT, Claude, Gemini, or any other model — but with guardrails that actually work.

Here’s what happens under the hood:

It’s the same AI experience your team loves, minus the privacy risk that keeps CISOs up at night.

AI isn’t going away. Blocking it won’t protect your data.

What protects you is knowing exactly what goes in and what stays out.

So the next time someone on your team pastes company data into ChatGPT, ask yourself one question:

Do you still control that information once it leaves your screen?

If not, you need something like Wald watching your back.