Regulatory compliance for AI tools and systems has become non-negotiable. With AI enhancing productivity across industries and departments, the law is starting to catch up.

Questions about user data and privacy have followed AI organizations from the start. Organizations are increasingly using these tools without being aware of the risks. IBM recently reported that the global average cost of a data breach stood at $4.44M in 2025.

In such times, compliance with internationally recognized privacy regulations acts as a necessary safeguard. We will explore what you should do to stay compliant and avoid penalties.

AI compliance means adhering to the relevant laws, regulations and ethical guidelines. It involves aligning AI systems with governance practices so that AI-powered systems are developed and deployed in a responsible and unbiased manner. This safeguards privacy, security and fairness.

Key regulatory changes to stay updated with:

Compliance in AI is the shield that protects user data from unauthorized use, prevents discrimination against specific groups and guards against the manipulation and deception of people. It helps ensure that no one can use AI-powered systems to invade individuals’ privacy or harm them.

Non-compliance also invites fines, penalties and strict action against enterprises and individuals. Meta is currently appealing a €1.2 billion fine for GDPR violations, the largest GDPR fine recorded.

While a Chief Compliance Officer is responsible for setting procedures and implementing them across an enterprise to ensure regulatory compliance, the CISO and CTO play a broad role in monitoring how data is used and keeping that use compliant.

A CISO is at the helm of cybersecurity, designing and implementing security programs. The CISO is also answerable for data breaches and held responsible for security risks within a company.

Security and compliance initiatives in the AI space have become increasingly challenging. Data sanitization and the redaction of Personally Identifiable Information (PII) and confidential enterprise data have become a priority.
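To make the idea of PII redaction concrete, here is a minimal sketch of scrubbing a prompt before it reaches an AI tool. The pattern set and the `redact_pii` helper are hypothetical names chosen for illustration, and simple regular expressions like these will miss many kinds of PII (names, addresses, free-form identifiers), so treat it as a starting point rather than a complete solution.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact_pii(text: str) -> str:
    """Replace each matched PII span with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

prompt = "Contact Jane at jane.doe@example.com or 555-123-4567. SSN: 123-45-6789."
print(redact_pii(prompt))
# prints: Contact Jane at [EMAIL] or [PHONE]. SSN: [SSN].
```

Note that the name "Jane" passes through untouched, which is why pattern matching alone is usually paired with other detection methods.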

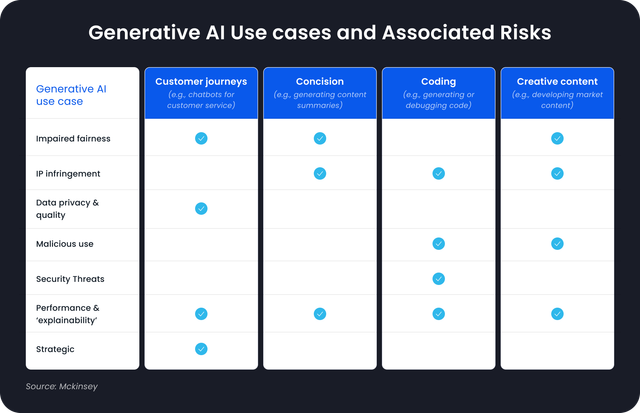

A survey conducted by McKinsey revealed that a mere 18% of organizations have an enterprise-wide council or board with the authority to make decisions involving responsible AI governance, while 70% of executives experience challenges with data governance. Wald.ai makes this process simpler and compliant, while leveling up productivity and protection.

Data privacy compliance is the ethical backbone of a data-driven organization.

It has become a catalyst for building trust with individuals, ensuring they have control over their personal information, and fostering a culture where data protection is ingrained in every aspect of business operations. By sticking to these ideas, organizations can lower risks, guard their image, and create a lasting digital space where people feel safe sharing their information.

Organizations are turning more to tech solutions to tackle compliance issues:

They’re using methods like data masking, differential privacy, and homomorphic encryption to protect sensitive info while still allowing data analysis. Adding AI and machine learning to privacy compliance helps organizations automate data protection tasks and spot unusual patterns. These technological advancements offer new opportunities for efficient and effective compliance management but come with their own set of issues.
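Of the techniques named above, differential privacy is the easiest to illustrate briefly. The sketch below releases a count with Laplace noise added, which is one standard way to achieve it. The function names, the example count of 1200 and the `epsilon` value are assumptions made for this illustration, not settings taken from any particular product.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1,
    so the noise scale is 1 / epsilon.
    """
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)  # fixed seed only so the sketch is reproducible
noisy = private_count(true_count=1200, epsilon=0.5, rng=rng)
print(round(noisy, 1))
```

A smaller `epsilon` means more noise and stronger privacy, and a larger one means a more accurate but less private answer. Choosing that trade-off is a policy decision as much as a technical one.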

Data governance is the process of setting and enforcing policies, standards, and procedures to efficiently manage data, ensuring quality and compliance with relevant laws. Data governance and AI compliance are closely linked: good data governance forms the basis for managing AI well.

However, ensuring compliance with the set policies comes with certain challenges. Let’s understand them through examples.

In 2018, Amazon stopped using an AI hiring tool after it displayed bias against women. It later emerged that the model had been trained on datasets of candidates who were predominantly men, so it learned to prefer male candidates over women.

Since AI systems rely on large datasets for training and development, their accuracy depends on the nature of their source. If the dataset turns out to be biased or inaccurate, it affects the reliability of the output produced by the AI system. It is important to check and ensure that the data used is relevant and accurate, although this can be challenging. One way to navigate this challenge is to research and choose reliable AI-powered systems.
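One simple way to check a training dataset for this kind of skew is to compare how often each group receives a positive label. The sketch below uses made-up toy data and hypothetical helper names (`selection_rates`, `impact_ratio`). The 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment guidance, not a universal standard.

```python
from collections import Counter

def selection_rates(records, group_key, label_key):
    """Return {group: share of that group's records with a positive label}."""
    totals, positives = Counter(), Counter()
    for row in records:
        totals[row[group_key]] += 1
        positives[row[group_key]] += 1 if row[label_key] else 0
    return {group: positives[group] / totals[group] for group in totals}

def impact_ratio(rates):
    """Ratio of the lowest group rate to the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Made-up toy data, not taken from any real hiring dataset.
training_rows = (
    [{"gender": "male", "hired": True}] * 60
    + [{"gender": "male", "hired": False}] * 40
    + [{"gender": "female", "hired": True}] * 6
    + [{"gender": "female", "hired": False}] * 14
)

rates = selection_rates(training_rows, "gender", "hired")
ratio = impact_ratio(rates)
print(rates, round(ratio, 2))
if ratio < 0.8:  # assumed review threshold (four-fifths rule of thumb)
    print("Label rates differ sharply across groups; review the data before training.")
```

A low ratio does not prove the data is unusable, but it is a signal that a model trained on it may reproduce the same imbalance.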

Samsung banned the use of ChatGPT after an employee accidentally uploaded highly confidential company data and it leaked.

Businesses using AI tools must make privacy and security an absolute priority. Using AI solutions and being assured of security is a cybersecurity dream. The challenge, however, persists in ensuring data security and safeguarding privacy rights.

The intelligibility and transparency of data are central to overcoming AI compliance challenges. To ensure AI systems comply with data privacy laws, businesses must conduct in-depth research on how and where the processed data is stored (and whether or not it is being stored).

Interestingly, the U.S. government and its federal agencies are using AI to detect corporate fraud and violations. The SEC has leveraged machine learning and AI to identify insider trading, while the FTC has leaned towards using AI to protect consumers and enforce privacy. Although the lack of a federal AI law has led to a patchwork of state regulations and has left businesses to jump through hoops to ensure AI compliance, the government does seem to be keen on balancing innovation and privacy.

Federal Laws and Regulations:

State Laws and Regulations:

The EU’s GDPR and California’s CCPA are prominent examples of data privacy regulations. They emphasize transparency, individual control, and the importance of data security measures.

These regulations require businesses to gain explicit consent, provide transparency around data usage, and allow individuals to exercise their rights over their data. Non-compliance can result in severe financial penalties and reputational damage.

Industry-Specific Compliance Requirements to Watch Out For

Trends in Governance - What to Expect Next?

The regulatory space will undergo massive changes with emerging new models. US regulations are different from GDPR but the growing concerns around privacy and data are likely to influence the introduction of stricter laws and comprehensive regulations similar to GDPR.

With an influx of AI models, AI-based threat detection and the adoption of preventive measures to identify and mitigate potential breaches are gaining momentum.

International cooperation is being called for to establish globally accepted standards of AI development and deployment.

Gartner predicts that companies using AI governance platforms will see 30% higher customer trust ratings and 25% higher regulatory compliance scores compared to competitors by 2028.

To keep up with regulatory changes, traditional methods will evolve to factor in reputational costs. This will be achieved through a combination of automated systems and governance experts.

This will further translate into demand for specialized professionals. The market will grow to support specialized areas such as incident handling, testing for weaknesses, compliance checks, transparency reporting, and technical documentation. To meet this need, more trained AI governance experts will have to enter the field. They will need training to do these jobs, so learning programs and certifications will be key, including new ones such as the International Association of Privacy Professionals’ certificate for AI Governance Professionals.

Collaboration across stakeholders to ensure compliance in AI will lead the way to stress-free usage of new technologies and optimizing future productivity.

Effective strategies involve fostering a culture of ethics and AI compliance among employees at the outset while also leaving room for mishaps and establishing strong monitoring practices.

Train Employees

Creating comprehensive employee training frameworks will help educate employees on data privacy laws and overall AI ethics. This will allow the personnel responsible for handling AI systems to be updated with the latest compliance requirements, security protocols, and ethical considerations.

Develop Comprehensive Incident Response Plans

The next step is to establish and regularly update comprehensive incident response plans. These are essentially risk mitigation strategies and risk management plans. A well-prepared response will allow you to quickly mitigate damage and ensure compliance with legal requirements.

Incorporate the Right Software Solutions

The best strategy is incorporating the right software solution to ensure AI compliance with regulatory requirements and safe AI usage throughout your organization.

There are multiple software tools you can choose from. When making the decision, make sure the platform offers comprehensive data protection solutions such as:

When looking for a software solution to ensure AI compliance, navigate toward options that also offer comprehensive enterprise management features. Such features are important to ensure smooth compliance management by gaining increased visibility into the processes, including access to dashboards and analytics and monitoring activities with audit logs.

Wald.ai is one of the best tools offering all the capabilities mentioned above.

Wald.ai allows organizations to securely integrate generative AI solutions into their daily work. The platform’s data security features include data anonymization, end-to-end encryption, identity protection, and other enterprise features that allow your company to leverage the power of AI tools while maintaining compliance with data protection regulations.