OpenAI has launched its first AI agent, Operator. It is currently available only to ChatGPT Pro users in the U.S., a plan that carries a hefty price tag of $200 per month.

Earlier this week, OpenAI also rolled out its Tasks feature, and speculation about its work on superintelligence has been rife. Let’s look at the capabilities of both ChatGPT Tasks and Operator.

| Aspect | ChatGPT Tasks | ChatGPT Operator |
| --- | --- | --- |
| Definition | Specific objectives or goals ChatGPT fulfills based on user input. | Tools or mechanisms that extend ChatGPT’s capabilities to interact with external systems. |
| Scope | Limited to internal functionalities (e.g., generating text, coding). | Enables interactions with the web, APIs, or real-world systems (e.g., buying tickets). |
| Autonomy | User-driven; ChatGPT acts only on provided instructions. | Can autonomously navigate websites, complete transactions, or access external data. |
| Examples | Writing emails, translating text, summarizing documents and more. | Surfing the web, ordering groceries, booking flights, or managing workflows. |
| Interaction Mode | Fully conversational; limited to interpreting and responding to prompts. | Mimics human-like interactions online, including filling forms, clicking, and navigating. |
| Available | Use with Plus, Pro or Teams subscription | Pro only |

‘Tasks’ is conversational and bounded, while ‘Operator’ unlocks real-world utility by interacting with external platforms. Given these capabilities, let’s look at the hype around OpenAI Operator and whether it delivers on its claims.

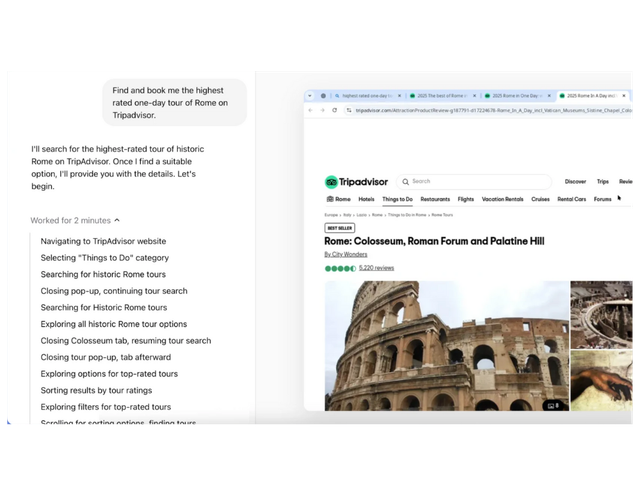

Operator saves you time by assigning a virtual agent to perform tasks on the web. It can make dinner reservations, book concert tickets, or take an uploaded image of your grocery list, add every item to the cart and buy it for you. It can use the mouse, scroll, move across websites and emulate the behaviour of a person.

Basically, be hands-free and let it automate your tasks.

Image source: OpenAI

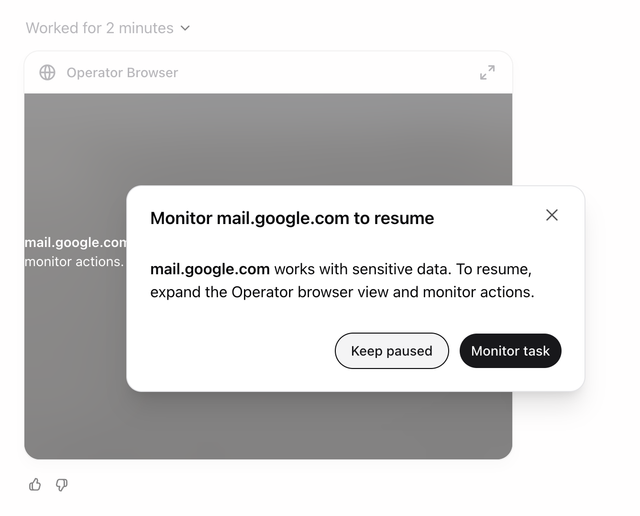

Automation is great, but can ‘Operator’ go off the rails and misuse such autonomy? OpenAI says it has put preventive measures in place, such as confirmation prompts before executing high-impact tasks, disallowing certain tasks, and a ‘watch mode’ for certain sites. Even so, these are only preventive measures; the best practice is to stay cautious and not give an agent free rein over your computer and data.

Image source: OpenAI

Operator runs on a model called Computer-Using Agent (CUA). It combines GPT-4o’s ability to analyse screenshots with browser controls such as the mouse and cursor. OpenAI claims it outperforms Anthropic’s and DeepMind’s agents on industry benchmarks that measure how well agents perform tasks on a computer.

CUA works from screenshots, so it is limited to what it can see in the browser interface. The screenshots let it reason about which steps to take next and adjust its behavior when it runs into errors and challenges.
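The observe, reason, act cycle described above can be pictured as a simple loop. The sketch below is not OpenAI's implementation: `FakeBrowser`, `choose_action` and `run_agent` are hypothetical names, the "screenshot" is a small dictionary rather than pixels, and the hard-coded rules stand in for the model's reasoning. It only illustrates how an agent might re-inspect the screen after every step and change course when an obstacle (here, a popup) appears.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBrowser:
    """Stand-in for a real browser; every name here is hypothetical."""
    page: str = "search"
    popup_open: bool = True          # an obstacle the agent has to deal with
    log: list = field(default_factory=list)

    def screenshot(self) -> dict:
        # A real agent would receive pixels; this sketch returns a summary.
        return {"page": self.page, "popup_open": self.popup_open}

    def perform(self, action: str) -> None:
        self.log.append(action)
        if action == "close_popup":
            self.popup_open = False
        elif action == "click_result" and not self.popup_open:
            self.page = "checkout"


def choose_action(observation: dict) -> str:
    """Placeholder for the model's reasoning over one screenshot."""
    if observation["popup_open"]:
        return "close_popup"         # adapt to the obstacle before moving on
    if observation["page"] == "search":
        return "click_result"
    return "done"


def run_agent(browser: FakeBrowser, max_steps: int = 10) -> list:
    """Observe, decide, act; repeat until done or the step budget runs out."""
    for _ in range(max_steps):
        action = choose_action(browser.screenshot())
        if action == "done":
            break
        browser.perform(action)
    return browser.log
```

Calling `run_agent(FakeBrowser())` walks through closing the popup and then clicking the result, because each decision is made from a fresh observation rather than from a fixed script.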

It also activates a ‘Take Over’ mode when a website requires passwords or other sensitive information, handing control back to you to enter it. Operator currently performs tasks only in a browser; in the near future, OpenAI wants to offer these capabilities through an API that will allow developers to build their own apps.

If you ask the model to perform an unacceptable task, it is trained to stop and ask you for more information, or it may simply fail to complete the task. This is meant to keep it from executing tasks with unwanted external side effects.
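One way to picture the safeguards mentioned so far (confirmation before high-impact tasks, Take Over mode for sensitive fields, and refusing disallowed tasks) is as a gate that every proposed action passes through. This is a minimal sketch under stated assumptions: the `gate` function, the `Decision` enum and the three category sets are hypothetical, and the example entries are illustrative guesses, not OpenAI's actual rules.

```python
from enum import Enum
from typing import Optional


class Decision(Enum):
    PROCEED = "proceed"        # the agent acts on its own
    CONFIRM = "confirm"        # ask the user before acting
    TAKE_OVER = "take_over"    # hand control to the user
    REFUSE = "refuse"          # decline the task


# Illustrative categories only; these are assumptions, not published rules.
SENSITIVE_FIELDS = {"password", "card_number"}
HIGH_IMPACT_ACTIONS = {"place_order", "send_email"}
DISALLOWED_ACTIONS = {"transfer_funds"}


def gate(action: str, target_field: Optional[str] = None) -> Decision:
    """Decide how much autonomy the agent gets for one proposed action."""
    if action in DISALLOWED_ACTIONS:
        return Decision.REFUSE
    if target_field in SENSITIVE_FIELDS:
        return Decision.TAKE_OVER
    if action in HIGH_IMPACT_ACTIONS:
        return Decision.CONFIRM
    return Decision.PROCEED
```

In this sketch, `gate("scroll")` lets the agent proceed, `gate("type", "password")` hands control to the user, and `gate("place_order")` asks for confirmation. Checking the strictest rule first means a disallowed action is refused even if it would otherwise only need a confirmation.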

CUA is far from perfect, and OpenAI acknowledges its limitations: the company has said it does not expect CUA to perform reliably in all scenarios all the time.

It cannot handle highly complex or specialized tasks either. You also don’t get unlimited access: although Operator can perform multiple tasks simultaneously, it is still subject to a usage limit that is updated daily.

It can also outright refuse to carry out tasks for security reasons. This limits the damage a hallucinating agent can do; for example, it should not use your credit card to make an absurd purchase on its own.

OpenAI’s Operator is their boldest move in building agents, but it needs to be refined to do more tasks while ensuring security.

Your Operator screenshots and content can be accessed by authorized OpenAI employees. Although you can opt out of letting OpenAI use your data for model training, you can’t completely restrict OpenAI employees from accessing it. It’s best not to let sensitive data slip into their hands.

Operator stores your data for 90 days even if you delete your chats, browsing history and screenshots. You can change other privacy settings in Operator’s Privacy and Security settings tab.

OpenAI has been finicky with its data storage practices since the beginning, but if you need to access ChatGPT securely, you can consider tools such as Wald.ai, which provide safe access to multiple AI assistants.

It’ll be interesting to see how Operator performs in comparison to Anthropic’s Computer Use and Google DeepMind’s Mariner.

OpenAI’s collaboration with DoorDash, eBay, Instacart, Priceline, StubHub, and Uber signals an intent to comply with these services’ agreements rather than act with complete autonomy.

Once this feature is available on all other plans, it will not only save users time by automating everyday tasks but also change how virtual assistants like Alexa and Siri are used. It takes things a notch higher by letting agents use the internet from your PC and perform tasks for you.

The new wave of AI agents is here, and with further refinements they will inevitably become a daily part of our lives.